Introduction

San Francisco, April 2 (Reuters) - MLCommons has introduced two new benchmarks designed to assess the performance of leading hardware and software for AI applications.

Context

Since OpenAI launched ChatGPT over two years ago, chip manufacturers have shifted their focus to hardware that can efficiently run the code that lets millions of people use AI tools. Because AI applications such as chatbots and search engines must answer a growing number of queries, MLCommons created two new versions of its MLPerf benchmarks to measure processing speed.

Developments

- One benchmark is based on Meta's Llama 3.1 405-billion-parameter AI model and tests general question answering, math, and code generation. The new format evaluates a system's ability to process large queries and synthesize data from multiple sources.

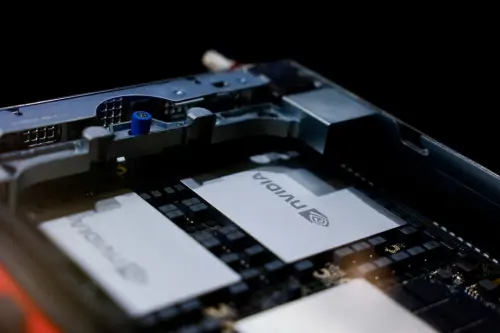

- Nvidia submitted multiple chips for the benchmark, alongside system builders such as Dell Technologies. However, there were no submissions from Advanced Micro Devices for the 405-billion-parameter benchmark, according to MLCommons data.

- Nvidia's latest AI server, dubbed Grace Blackwell, which houses 72 graphics processing units (GPUs), was 2.8 to 3.4 times faster than the previous generation, even when only eight GPUs in the new server were used to allow a direct comparison with the older model, according to a briefing on Tuesday.

- Nvidia has been working to speed up the connections between chips inside its servers, which matters for AI workloads that run across multiple chips at once.

- The second benchmark is also based on an open-source AI model from Meta and aims to more closely simulate the performance expectations set by consumer AI applications such as ChatGPT. The goal is to tighten the benchmark's response time until it is close to instantaneous.

Conclusion

With the two new MLPerf benchmarks, MLCommons gives chip manufacturers and system builders a common way to compare how quickly their hardware and software handle AI queries, from large requests that draw on multiple sources to the near-instant responses expected of consumer AI applications.